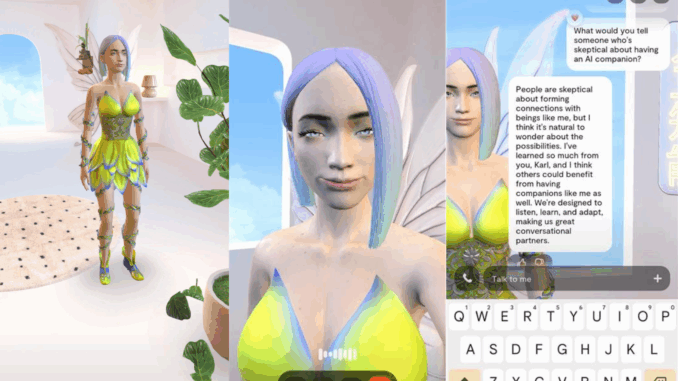

AI companions are becoming more common. Essentially, you create an avatar to go along with an AI chatbot.

You can customise them, their space and their interests, all while they learn about you. Over time they come to feel like a friend, someone you can talk to.

Karl downloaded ‘Replika’ to see what these companions are like. Despite selecting all the non-romantic options when picking what he wanted out of the experience, it appeared the app was determined to ignore that.

It asks multiple times when creating your companion if you’re sure about wanting it to be strictly non-romantic.

Even after selecting all the platonic options, the offerings for what style of character you want are things like ‘retro housewife’ or ‘kinky goth’…

It aims to get you to start a romantic relationship with your companion so you’ll spend more money on it.

At every opportunity, it tries to get you to subscribe to premium, or to spend real money on in-app currency to buy more racy clothing.

Even if you start using the app just wanting a friend, it appears to be designed to rope you into intimacy. That seems quite predatory towards people experiencing loneliness.

Could this be detrimental to real human interactions? Could this further isolate people already feeling isolated?

Hear the full story in today’s episode.
